The “Campus Spatial de l’Université Paris Diderot” invited me to give a talk entitled “Histoire du traitement des données spatiales” on September 20, 2013. This was a personal account, closer to a random walk in the fog than to a true historical perspective on the subject. The text below is the transcription of the second part of this talk; further parts will appear later on this blog.

Archives

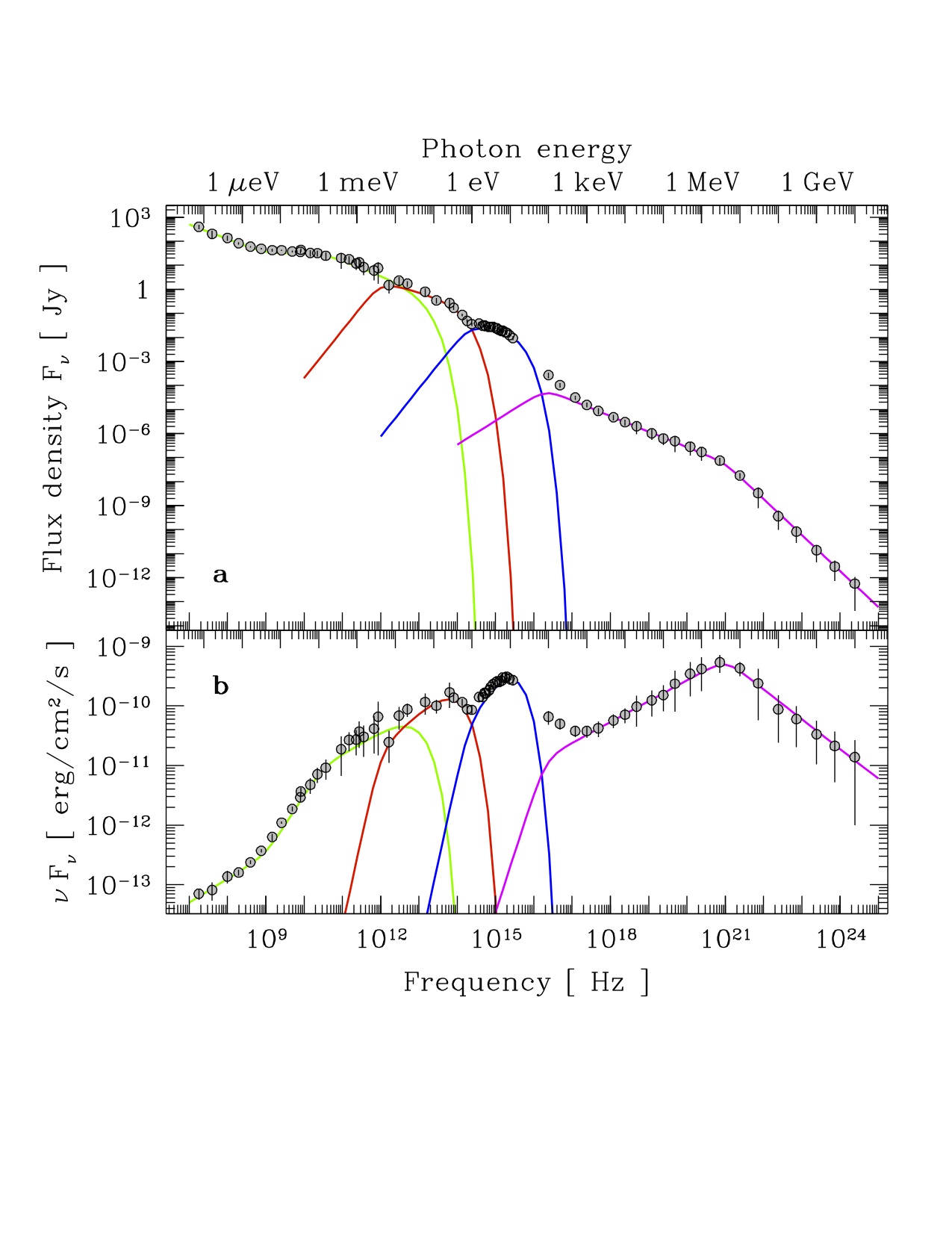

Keeping data in a form that is suitable for uses other than that of the primary observer is a further very important aspect of observatory-like operations. The long-lasting value of astronomical data is striking in astrophysical research that uses data covering a very large part of the electromagnetic spectrum. This so-called multi-wavelength approach to the physics of cosmic objects became important in the 1980s, in particular for the study of active galactic nuclei (AGN). AGN were first discovered in the 1940s as the bright blue cores of a number of galaxies, the Seyfert galaxies, and then again in 1963, when radio and optical data on peculiar "stars" were combined, as the quasi-stellar objects or QSOs. These objects were later found to be the cores of galaxies as well. Observations of AGN covering the electromagnetic spectrum from radio waves to gamma rays revealed a powerful emission (QSOs can be as bright as or brighter than whole galaxies), such that the energy flux observed per decade of frequency is roughly the same over many decades of the spectrum, a feature that is yet to be understood. Figure 1 shows the multi-wavelength spectrum of the bright QSO 3C 273, an object we studied over many years (for a review on 3C 273 see Courvoisier T.J.-L., A&ARv 9, 1, 1998). The emission of these objects in different regions of the electromagnetic spectrum is due to very different physical processes, including for example radiation from heated dust or radiation emitted by relativistic electrons spiraling in magnetic fields. The rough constancy of the emitted energy flux per decade of photon energy means that each emission mechanism emits roughly the same energy per unit time as the others. The reasons behind this apparent conspiracy remain unclear, at least to the author of these lines.

FIG 1: The multiwavelength spectrum of 3C 273. The top panel gives the flux observed per unit of frequency, while the bottom panel gives the flux per logarithmic frequency interval (proportional to the flux per decade of photon frequency). The coloured curves indicate the different emission components, see Courvoisier T.J.-L., A&ARv 9, 1, 1998 for a discussion.
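
The link between the two panels of Figure 1 can be written out explicitly. The short derivation below is a sketch in standard notation that does not appear in the talk itself: $F_\nu$ stands for the flux per unit frequency (top panel) and $\nu F_\nu$ for the flux per logarithmic frequency interval (bottom panel).

```latex
% Flux contained in a small frequency interval, rewritten per logarithmic interval
dF = F_\nu \, d\nu = \nu F_\nu \, d(\ln \nu)

% Flux emitted in one decade of frequency, from \nu_0 to 10\,\nu_0
F_{\mathrm{decade}}
  = \int_{\nu_0}^{10\,\nu_0} F_\nu \, d\nu
  = \int_{\ln \nu_0}^{\ln(10\,\nu_0)} \nu F_\nu \, d(\ln \nu)

% If \nu F_\nu is roughly constant over that decade
F_{\mathrm{decade}} \approx \ln(10) \, \nu F_\nu \approx 2.3 \, \nu F_\nu
```

Read this way, "the same energy flux per decade" is the statement that $\nu F_\nu$ is roughly flat, that is $F_\nu \propto \nu^{-1}$ on average, which is why the bottom panel is the natural place to compare the emission components.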

Archives have to reside on some machines. Where these machines reside and who controls them has been a sensitive issue. Indeed, as long as only one or very few copies of the data exist, those in charge can physically decide who may or may not access the data. The perceived need for this kind of control, together with the impression that only ESA could guarantee the integrity of ESA satellites' data financially and technically, led ESA to insist that the XMM-Newton data reside in its hands. In this way ESA could itself implement the data access rules, had the impression that it would be able to fund the data-related work over a long period of time, and could benefit from the visibility that comes with the data being accessed in an easily recognisable environment. ESA, however, did not have the same feelings about the software to be developed to analyse the data, nor did it have the necessary competence. ESA therefore entrusted the development of the data analysis software to scientific teams (Leicester University in particular), had data products generated on ESA's sites as well as on outside sites, and required that the data and the analysis products, whether produced in or out of house, be accessible only through ESA's facilities. This led to an overly complex system with a multiplicity of interfaces between widely separated groups and a lot of data movement. The baroque construction that resulted could have been avoided if the feeling of power attached to the physical presence of data on ESA's premises had been recognised as possibly unhelpful. It is ironic that a decade before designing the XMM-Newton system the same ESA had, for lack of interest, let the EXOSAT data move out of Europe and into the US. For a number of years the EXOSAT data could thus be accessed only through NASA facilities.

That European institutes outside ESA are able to deal with satellite data was demonstrated in the case of ESA's INTEGRAL mission. This story will be the subject of the fourth of these personal contributions to the history of data analysis.

The actual location of data within science ground segments is now losing its edge. Data from ongoing observatories are rather modest in comparison with present-day data storage capabilities and can be located anywhere, sometimes even on personal computers. As a consequence, keeping many copies of the data has become possible and the feeling of power associated with the actual act of keeping the data has become less marked. Some missions, however, are generating data sets that are very large by today's standards. These require, at least for a time, ad hoc facilities that are not within reach of academic institutions. The physical requirements in terms of space, cooling and electricity to keep these large data sets are also such that the interest in having them at hand decreases somewhat. But it will be interesting to see whether tensions around copies of large upcoming data sets do develop. This could be the case for projects like the Cherenkov Telescope Array (CTA) now being designed.

Data are one thing. Software to access them, to understand the formats used, and to analyse the data is another matter. The analysis relies heavily on a deep knowledge of the instrument properties and idiosyncrasies (see the first part of this series). The resulting software is therefore often complex and difficult to produce. And data without this software are useless. As much care must therefore be given to software, calibration data and algorithms as to the data themselves. This renders all impressions of power and command for those in charge of the data somewhat academic.

The data, and the software, must be kept for a long time too. Discoveries over the last decades have shown that cosmic phenomena take place on all timescales from billions of years down to milliseconds. Since we don't know what is yet to be discovered, we do not know how relevant the data we acquired at a given epoch might become. An interesting example is given in Fig. 2, which shows the light curve of the bright quasar 3C 273 over a timespan of a century, as it was retrieved in the 1980s from photographic plates. These data show variability on many timescales that we still fail to understand. But even if we do not understand the cause of the variations, their existence shows that the object must be very compact, smaller than the distance light travels during a typical variation event. Thus data acquired in the late 19th century with telescopes that were then the state of the art, on an object that nothing distinguished from large numbers of rather uninteresting stars, suddenly became central to understanding a major property of quasars.

FIG 2: The light curve of the quasar 3C 273 over a century from archived photographic plates. From Angione R.J. and Smith H.J., AJ 90, 2474, 1985.
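
The compactness argument made above from the variability can be turned into numbers with a few lines of Python. This is only a minimal sketch of the light-travel-time bound R ≲ c Δt: the function name and the timescales in the loop are hypothetical choices made for illustration, not values measured from the light curve, and the estimate ignores any relativistic or cosmological corrections.

```python
# Light-travel-time bound on the size of a variable source: R <~ c * dt.
# Illustrative sketch only; the timescales used below are hypothetical.

C_M_PER_S = 299_792_458.0         # speed of light in m/s (exact SI value)
AU_M = 149_597_870_700.0          # astronomical unit in metres
PARSEC_M = 3.0856775814913673e16  # parsec in metres
DAY_S = 86_400.0                  # seconds in one day


def max_source_size_m(variation_days: float) -> float:
    """Return the upper bound c * dt (in metres) on the size of a source
    whose brightness varies coherently on a timescale of variation_days."""
    if variation_days <= 0:
        raise ValueError("variation timescale must be positive")
    return C_M_PER_S * variation_days * DAY_S


if __name__ == "__main__":
    # Hypothetical variation timescales: one day, one month, one year.
    for days in (1.0, 30.0, 365.25):
        size_m = max_source_size_m(days)
        print(
            f"dt = {days:7.2f} d -> R <~ {size_m / AU_M:9.1f} au"
            f" = {size_m / PARSEC_M:.2e} pc"
        )
```

A variation on a timescale of a year thus limits the emitting region to about one light-year, roughly 0.3 pc, which is minute compared with the tens of kiloparsecs spanned by a galaxy.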

Of much higher importance than any perceived or real power associated with data or software is the responsibility that the teams in charge bear. Satellite data were acquired at great expense of money and effort. The development and the operation of complex space missions, as well as of modern astronomical instruments in remote deserts, require ideas, ingenuity and immense quantities of work. A very rough estimate of the manpower behind a mission like INTEGRAL indicates that some 10,000 man-years were necessary to complete the mission. Only with such tremendous efforts do instruments function unattended, with no possibility of replacing parts (with the exception of the Hubble Space Telescope, though there the replacements also came at huge expense), over long periods of time, and deliver reliable data. Those in charge of the data during and after a mission must be aware of this heritage and of the trust that potential future users place in their work and effort. The example of the century-long light curve of 3C 273 illustrates that potential users may come several generations later.

Public archives are treasures for science. Traditionally in the astronomical community, data have some form of proprietary protection for a limited period of time, usually one year, and become publicly available thereafter. This arrangement gives the scientists who have proposed a given observation on some instrument the possibility to exploit the corresponding data before anybody else can use them. Archives therefore not only serve unexpected purposes years after the data were obtained, they also open up many possibilities for researchers in institutes that have not had the opportunity to develop instruments. Data are now cheap to obtain through the web, and scientific processing and analysis software is readily available, free and, provided it was carefully engineered, user-friendly. Groups wanting to achieve internationally competitive work using public data from major instruments therefore need a modest computing facility, access to the internet, a solid knowledge of the field, some originality and the will to learn how to use the data. These skills can be acquired through courses, exercises, collaborations and visits to institutes with experience. This route was followed very successfully by groups in Kiev who were able, with modest Swiss funding, to acquire the necessary hardware and to organise courses in high energy astrophysics and practical exercises using XMM-Newton and INTEGRAL data. This programme was realised in collaboration with the ISDC (see the fourth part in this series) in Geneva. The result was a lively community in Kiev under the name of VIRGO (http://virgo.bitp.kiev.ua/) and a number of well-trained students, who went on to find PhD or postdoctoral positions in various European institutes.

The well-recognised value of archived data for broad scientific purposes, sometimes far from the original idea behind a specific observation, has led to varied attempts to make the data easier to approach. Projects were devised to allow general users to combine data from numerous facilities that are pertinent to a given piece of research. This laudable aim led to some very ambitious programmes. The ESIS programme of ESA, for which I had the privilege to serve as chair of an oversight committee, was one of these. Large sets of requirements were established and contracts to satisfy them were awarded to software companies. It turned out, however, that the ambitions were far beyond what was feasible at the time (mid 1990s), both in terms of hardware capacities and in terms of matching the idiosyncrasies of the sometimes widely different data (in terms of angular, energy and temporal resolution for example) and data quality. ESIS therefore never led to satisfactory results. Much more modest approaches proved more fruitful. They set themselves simple goals such as, for example, standardising names and conventions for the data. Even this required considerable discussions that lasted for years and involved institutes that were sometimes far apart in their ways of developing software systems. This is the approach followed by the Centre de Données Stellaires, CDS, in Strasbourg. It is interesting to see that efforts with the same general aims keep flourishing and little by little do allow users to use data from several instruments to tackle the questions at hand. These efforts went under the umbrella of the "Virtual Observatory", VO, with a very significant level of funding for a number of years. Going back to the study of AGN and their emission components, this approach is bound to continue to yield valuable insights.

Efforts to assemble data from many different sources, as we have seen in astronomy, are under way in many areas of science. There are now, for example, intense discussions in the medical sciences aiming to collect all relevant medical and biological data pertaining to individual patients and to make them available in a suitable form to health professionals. This is reminiscent of the process astronomers went through and are still struggling with. I trust that these are not the only fields in which combining data of different origins and quality is expected to be fruitful, and I hope that discussions across the boundaries of scientific disciplines can help find efficient paths through the many obstacles hidden in data jungles.